I'm probably wrong, but...

Don't forget Australia! Scaling a website out to other continents

In my previous blog post about fnbr.co, a Fortnite cosmetics directory where my job is to keep the site available and fast and to update the backend code when necessary, I documented the site's rapid growth and the scaling challenges that came with it. In this post I'll cover how I use Cloudflare Workers.

A new challenge

I am pleased to say that the solution from that post works fantastically and hasn't struggled under load since it came online about a month ago.

However, I have never been quite happy with the way the site serves visitors outside the United States. The servers were originally put there simply because the US accounts for the largest share of the site's traffic, and because they are on the East Coast, Europe doesn't get a bad deal either.

But that clearly leaves out the APAC region and the elephant in the room: Australia.

Everyone knows Australia has very slow and very, very expensive Internet, and the problem is compounded for servers, which are mostly concentrated in Sydney. Bandwidth is at a premium, and unfortunately there are very few undersea cables connecting Australia to other continents such as Asia and North America.

Although I have used DigitalOcean (referral link, gives you $10 free sign-up credit and gives me free credit too) for all of fnbr.co's servers until now, I knew it wasn't an option here: their only location in the entire APAC region is Singapore. This is despite a long-running request on their feedback site dating back to 2013!

I wanted to avoid dedicated hosting if possible, as it would be quite rigid and would remove most of the cloud benefits I rely on, such as hourly billing and API-triggered deployments, not to mention being astronomically more expensive.

I did try hosting in Singapore, as it is more readily available, cheaper and on DigitalOcean, but in my opinion the latency and load times to almost everywhere in Australia were unacceptable; they were only just faster than going to New York!

This is probably due to how Sydney-centric the Australian Internet is, the lack of submarine cables to Singapore, and poor infrastructure within Australia itself.

So I snapshotted my progress for use in Europe at a later date and abandoned the idea of Singapore: I'd have to use an actual Australian host.

There are still a few cloud providers in Australia: Google, Microsoft and Amazon are all available in Sydney, if not also in Melbourne and Brisbane. However, their pricing doesn't really fit this project's budget, so I went to my backup plan, Vultr (again a referral link).

They have the same pricing structure as DigitalOcean, and I had used them before, so I quickly spun up a Sydney server to start the setup process.

In hindsight, I should have set up a droplet in the UK and moved a snapshot of it over to Sydney, so I wouldn't have been working over a high-latency connection during setup, which was painful.

I do have Ansible configurations for setup, but for some reason they didn't work well with Vultr's server configurations.

How to route traffic?

Routing requests from users to servers closest to them was always the biggest hurdle for this project. I looked at a few options:

- Geographically aware DNS
  - This is where the DNS response differs depending on where you are, so in Asia you would get the IP address of an Asian server, such as Singapore.
  - Ultimately this wasn't chosen, mainly due to cost, although on the surface it would have been the simplest solution.
- Geo-prefixed subdomains, e.g. eu.fnbr.co, au.fnbr.co etc.
  - This wasn't chosen as it provides a poor user experience and requires an initial trip to an origin, potentially far away, to redirect the user appropriately.
  - It also isn't very 'shareable'.
- Code at the edge
  - This is where I could run some code at the 'edge' of a CDN; fly.io offer this, as do Cloudflare with their new Workers product.
  - After evaluating costs, I chose this option.

GeoDNS would have been the simplest option, but there are a limited number of providers unless you want to roll your own like Wikipedia (no thank you!). One option suggested to me by Oliver Dunk was DNSimple; unfortunately, I was unable to use it because I also use Cloudflare for DNS.

Other providers worth a mention are AWS Route 53, NS1, Constellix and Dyn.

Cloudflare also have a GeoDNS offering but, like most others, it is billed by number of queries, and in my opinion the pricing is quite steep and hard to estimate. Furthermore, for technical reasons I don't need (or want) every route going to the closest server: my app servers in other regions only have read-only Redis slaves, so they cannot read from the main database or write to the cache. Only a select number of high-traffic, cacheable routes should therefore be served locally.
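A minimal sketch of that rule in code, assuming hypothetical `/shop` and `/upcoming` prefixes stand in for the real high-traffic routes: only read-only methods on allowlisted paths are eligible for a regional replica, and everything else goes to New York. The function and constant names are illustrative, not taken from the site.

```typescript
// Hypothetical path prefixes standing in for the site's high-traffic, cacheable pages.
const LOCAL_PREFIXES = ["/shop", "/upcoming"];

// Methods that never need to write, so a read-only replica can answer them.
const READ_ONLY_METHODS = new Set(["GET", "HEAD"]);

/** True if a request may be served by a regional replica rather than New York. */
export function canServeLocally(method: string, pathname: string): boolean {
  if (!READ_ONLY_METHODS.has(method.toUpperCase())) {
    // Anything that might write (logins, form posts) must reach the primary.
    return false;
  }
  // Match the prefix itself or anything beneath it, but not e.g. "/shopping".
  return LOCAL_PREFIXES.some(
    (prefix) => pathname === prefix || pathname.startsWith(`${prefix}/`),
  );
}
```

In the setup described here, the restriction is done by only enabling the worker on chosen routes in Cloudflare, so an in-code check like this would be a belt-and-braces addition rather than a replacement.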

Enter Cloudflare Workers

Cloudflare Workers is a relatively new product that lets you run your own code at all 150+ of Cloudflare's edge locations globally. It is similar to AWS Lambda in that it is "serverless" code execution. You can read the release blog post here.

The concept of serverless code is very powerful with a lot of potential uses but I won't go into that here.

Cloudflare's version is billed by the number of requests executed: it costs $5 per month to enable, which includes 10 million requests, plus $0.50 per additional million. Crucially, however, it can be enabled on a per-route basis, allowing me to keep costs low.
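To put those numbers together, here is a small cost estimator using the figures quoted above. Charging overage pro rata rather than rounding up to whole millions is my assumption, and the constants are the launch pricing stated in this post, so current Workers pricing may differ.

```typescript
// Pricing as quoted in this post; current Workers pricing may differ.
const BASE_USD = 5; // monthly fee to enable Workers
const INCLUDED_REQUESTS = 10_000_000; // requests covered by the base fee
const USD_PER_EXTRA_MILLION = 0.5; // charge per additional million requests

/** Estimated monthly bill in USD, assuming overage is charged pro rata. */
export function monthlyWorkerCost(requests: number): number {
  const extraRequests = Math.max(0, requests - INCLUDED_REQUESTS);
  return BASE_USD + (extraRequests / 1_000_000) * USD_PER_EXTRA_MILLION;
}
```

For example, 30 million worker requests in a month would come to $5 + 20 × $0.50 = $15 under these figures.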

The logic

The worker started out simple: following the documentation, I made it read the CF-IPCountry header from each request and map that country to a continent using country.io's data.

Then it simply fetched the page from the server closest to that continent and passed the response back to the user.
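A minimal sketch of that routing logic, under several assumptions: the origin hostnames are hypothetical, the country table is a small hand-copied excerpt in the shape of country.io's continent.json (ISO country code to continent code), and Oceania and Asia are taken to be the two continents sent to Sydney. It uses the current ES module Worker syntax; a worker from this era would have used the older `addEventListener("fetch", ...)` form.

```typescript
// Hypothetical origin hostnames; the real fnbr.co origins are not given in the post.
const ORIGINS = {
  newYork: "https://ny.origin.example",
  sydney: "https://syd.origin.example",
} as const;

// Small hand-copied excerpt in the shape of country.io's continent.json
// (ISO 3166-1 alpha-2 country code -> continent code).
const COUNTRY_TO_CONTINENT: Record<string, string> = {
  US: "NA", CA: "NA", GB: "EU", DE: "EU",
  AU: "OC", NZ: "OC", SG: "AS", JP: "AS",
};

// Continents sent to Sydney; every other continent, and unknown countries
// such as Cloudflare's "XX" or "T1", fall back to New York.
const CONTINENT_TO_ORIGIN: Record<string, string> = {
  OC: ORIGINS.sydney,
  AS: ORIGINS.sydney,
};

export function pickOrigin(country: string | null): string {
  const continent = country ? COUNTRY_TO_CONTINENT[country.toUpperCase()] : undefined;
  return (continent && CONTINENT_TO_ORIGIN[continent]) || ORIGINS.newYork;
}

// Keep the path and query string, but point the request at the chosen origin.
export function rewriteToOrigin(requestUrl: string, origin: string): string {
  const url = new URL(requestUrl);
  const target = new URL(origin);
  url.protocol = target.protocol;
  url.host = target.host;
  return url.toString();
}

// Worker entry point in the ES module syntax.
export default {
  async fetch(request: Request): Promise<Response> {
    const origin = pickOrigin(request.headers.get("CF-IPCountry"));
    // Copy method, headers and body from the incoming request.
    return fetch(new Request(rewriteToOrigin(request.url, origin), request));
  },
};
```

Keeping the country-to-origin decision in a pure function makes it easy to test and to extend when new regions are added.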

The beauty of this is that it all runs at the 'edge', i.e. as close to the end user as possible.

I ran some tests and asked a few people in Australia for their load times, and both they and I were astounded by how fast the responses were.

As I alluded to earlier, the worker is only enabled on a select number of routes to keep costs down; the API, for example, is still served from New York at the time of writing.

What if something goes wrong?

After the worker had been active for a few hours, I suddenly thought, "What if something goes wrong?" If the Sydney server were overloaded or went offline completely, it would be a disaster: two entire continents would effectively be unable to access the website.

I searched around and found very little example code for Workers, but I did find this, which gave me the idea of using a timeout.

So now, if the request to the closest server takes longer than a predefined time, the worker requests the page from the main servers in New York instead and serves that, seamlessly to the user, albeit with a slight, unavoidable delay.
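A sketch of that fallback, using an `AbortController` to cancel the replica request once a time budget expires. The 2000 ms budget, the function name and the injectable `fetcher` parameter (there so the logic can be tested without a network) are my assumptions, not details from the post. It also falls back when the replica request fails outright, not only when it is slow, which goes slightly beyond the timeout described above.

```typescript
// Hypothetical time budget for the nearby replica before giving up on it.
const REPLICA_TIMEOUT_MS = 2000;

// Any fetch-like function; defaults to the global fetch, injectable for testing.
type Fetcher = (url: string, init?: { signal?: AbortSignal }) => Promise<Response>;

export async function fetchWithFallback(
  replicaUrl: string,
  primaryUrl: string,
  timeoutMs: number = REPLICA_TIMEOUT_MS,
  fetcher: Fetcher = (url, init) => fetch(url, init),
): Promise<Response> {
  const controller = new AbortController();
  // Abort the replica request if it has not produced a response in time.
  const timer = setTimeout(() => controller.abort(), timeoutMs);
  try {
    // "return await" so a rejection (including an abort) lands in the catch.
    return await fetcher(replicaUrl, { signal: controller.signal });
  } catch {
    // Timed out or failed outright: serve the page from the primary in New York.
    return fetcher(primaryUrl);
  } finally {
    clearTimeout(timer);
  }
}
```

Because only URLs are passed along, this suits the read-only GET routes the replicas serve; forwarding request bodies would need more care, since a body can only be consumed once.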

Mission Accomplished.

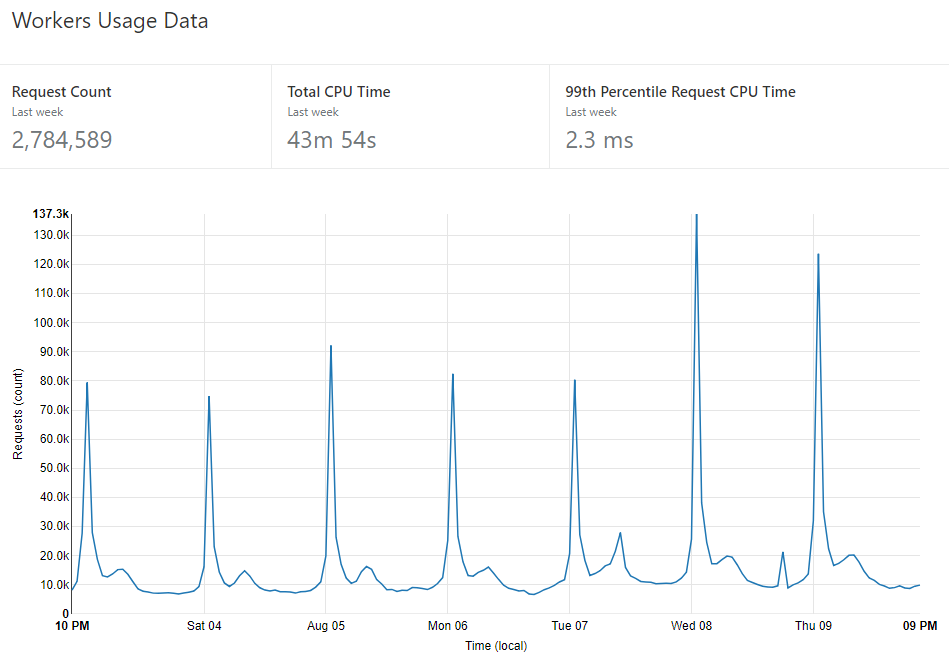

Worker usage for a week after activation

As you can see above, the worker has a distinct peak every day at 1am UK time, and another, smaller spike during the European morning.

According to both Cloudflare's analytics and Google Analytics, the top three countries by monthly users are, in order: the United States, the United Kingdom and Australia.

Two of those three now had a server in the region; the third, the United Kingdom (and Europe more widely), now needed one.

As the worker is billed per execution at a rate of $0.50 per million, and not by the outcome of my code (whether the user is routed to Sydney or New York), it seemed a waste not to improve performance for other countries too, since the only extra cost would be the servers themselves.

So I used my snapshot from previous testing in Singapore and fired up a 'replica' in Amsterdam on DigitalOcean.

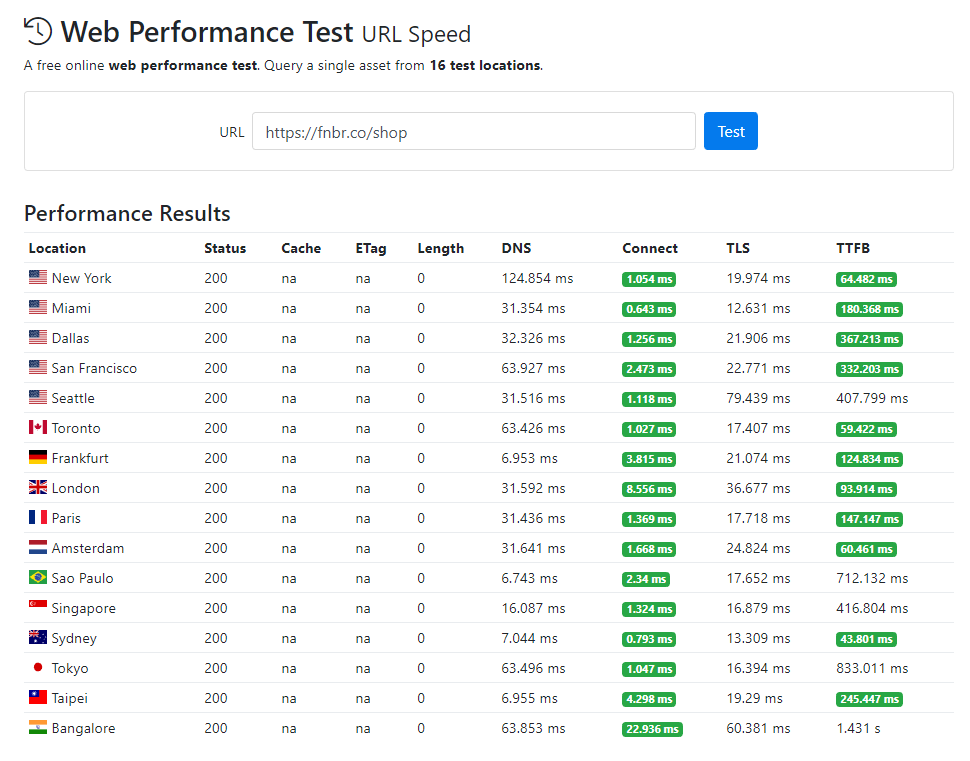

TTFB by location

Above is the TTFB (time to first byte) by location, measured with KeyCDN's performance test.

The worker is functioning well. Each of the two replicas has a local Redis instance slaved to the master in New York. These instances are read-only, meaning the data cannot be edited locally, and they are updated automatically whenever a change is made on the master.

My next aim is to add a West Coast USA server; however, it currently isn't possible to determine which specific Point of Presence (PoP) the worker is executing in, so I am limited to the CF-IPCountry header, which only tells me the country.

Beyond that, I want to investigate using the worker to cache the shop locally in each PoP, bypassing the cache for logged-in users; currently, though, Workers don't have a great way to access data from an outside source or to store it.
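Workers runtimes now expose a per-data-centre Cache API (`caches.default`, with `match` and `put`), so the idea could be sketched as below. The `session` cookie name is hypothetical, and the cache and origin fetch are passed in as parameters so the logic can be exercised outside a Worker; inside one you would pass `caches.default` and, ideally, hand the `put` to `ctx.waitUntil` rather than awaiting it.

```typescript
// The subset of the Workers Cache API (caches.default) this sketch relies on.
interface EdgeCache {
  match(request: Request): Promise<Response | undefined>;
  put(request: Request, response: Response): Promise<void>;
}

// Hypothetical name of the cookie that marks a logged-in user.
const SESSION_COOKIE = "session";

export function isLoggedIn(cookieHeader: string | null): boolean {
  if (!cookieHeader) return false;
  return cookieHeader
    .split(";")
    .some((part) => part.trim().startsWith(`${SESSION_COOKIE}=`));
}

export async function serveShop(
  request: Request,
  cache: EdgeCache,
  fetchOrigin: (request: Request) => Promise<Response>,
): Promise<Response> {
  // Logged-in users may see personalised pages, so bypass the cache entirely.
  if (isLoggedIn(request.headers.get("Cookie"))) {
    return fetchOrigin(request);
  }
  const cached = await cache.match(request);
  if (cached) return cached;

  const response = await fetchOrigin(request);
  if (response.ok) {
    // Cache a copy: a Response body can only be read once.
    await cache.put(request, response.clone());
  }
  return response;
}
```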

Thanks for reading, I hope you found this interesting and of course if you have any questions feel free to comment below.